10 Best Email Marketing Services for Businesses in 2024

The most in-depth test of email marketing services ever performed

To find the absolute best, we personally tested dozens of email marketing services. We devised a professional, methodical approach and put every little feature to the test – from setting up the marketing operation to actually blasting emails and analyzing the results. We also kept a very close eye on support, contacting agents repeatedly to see who you could actually count on in your time of need.

After months and months of intense testing and borderline-obsessive scrutiny, we’re proud to present you with our definitive ranking of the 10 best email marketing services available today. Our report should answer every question you might have, including every service’s strengths, weaknesses, and recommended use cases.

The verdict is in. Let’s see which service is best for you.

ActiveCampaign

![active-campaign-email-editor-2-850x435]()

- Feature-packed plans for growing businesses

- All plans allow unlimited sending

- Extensive automation and CRM options

- Excellent value for your money

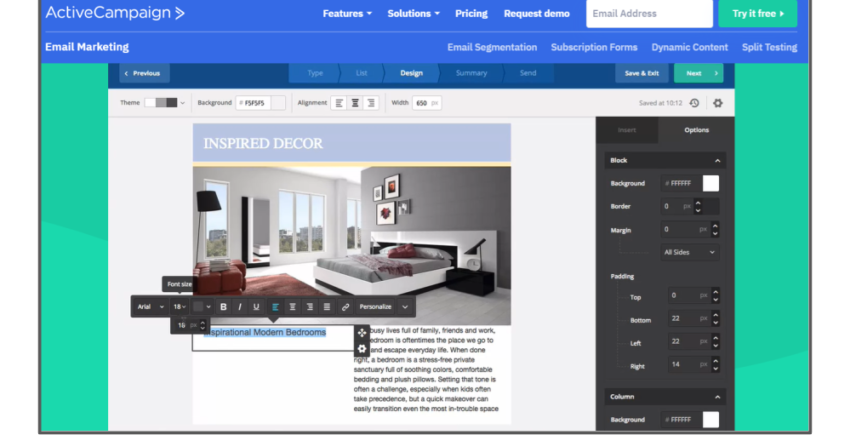

ActiveCampaign is on this list because I think it gives you great value for your money, especially given the sheer volume of useful features it offers – and not just for email marketing. It’s a great all-rounder for a growing business, with CRM (customer relationship management) tools, social media marketing tools, and machine learning features. We recently put it through its paces and updated our review of ActiveCampaign, complete with performance, spam, and deliverability tests.

The email editor is quick and easy to navigate, with a lot of personalization options. But one of my favorite things about ActiveCampaign is its automation tools. You can send broadcast, triggered, and targeted emails, autoresponders, and funnel emails, and schedule your emails for specific dates, times, and recipients. I found it really easy to work with the drag-and-drop automation builder.

ActiveCampaign comes with a lot of built-in features, so it took me a lot longer to pick up than other services. I still think it’s a worthy investment, considering the powerful tools you get in return.

ActiveCampaign offers a 14-day free trial – not very long compared to other platforms, but no credit card is required and there’s no extra setup.

Brevo (formerly Sendinblue)

![Screenshot-2023-06-27-154458]()

- Affordable plans for growing businesses

- High-quality, easy-to-use tools

- SMS marketing in addition to email campaigns

- Free plan lets you send 300 emails per day

Brevo (formerly Sendinblue) makes some bold promises on its website, pledging to be the right solution for literally every type of business. I may have been skeptical that it would be right for me, but in my recent, in-depth review, I tested it out and it did not disappoint. In fact, I’ve just updated my review with more performance and deliverability tests that you’ll want to check out before we go any further.

It has 60+ email templates to choose from – not as many as other services, but they are all high quality (plus there’s the option to create your own). There are also around 50 integrations to choose from – again, not a lot, but all the ones you’ll actually want are there, with some great options for e-commerce sites too. Brevo is all about quality, not quantity.

You don’t need any email marketing experience to use Brevo’s tools, not even for the potentially complicated ones. Take email automation workflows, for example. This could be risky, “advanced” email marketing territory, but there are nine common workflow templates already set up for you to add to the editor, which, by the way, is super intuitive.

Another thing I really appreciate about Brevo is its free plan, which allows you to send up to 300 emails a day. This is a lot, considering most other services will give you a similar cap of emails per month. Also, unlike other services that will only give you a free trial period, you can use Brevo’s free plan for as long as you like. So, if this is what you’re after, take a look at our list of the best (really) free email marketing tools.

AWeber

![AWeber’s smart email designer – best email marketing solutions]()

- Top-tier service with years of experience

- Industry-leading email deliverability

- Over 6,500 free stock images available

- More than 850 integrations

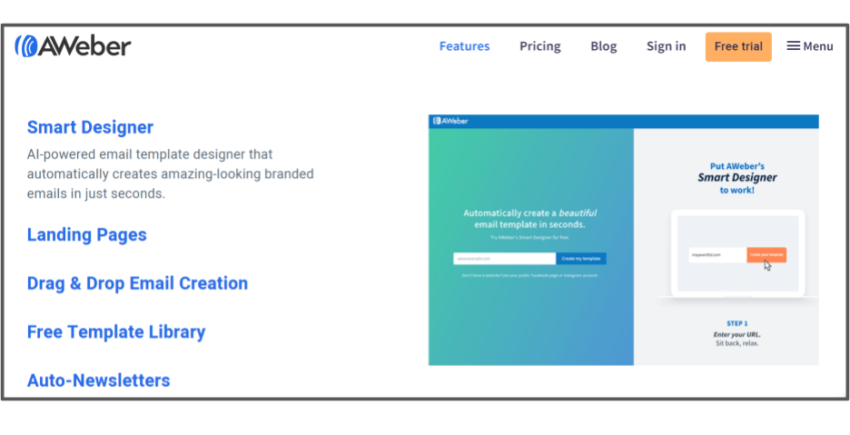

Even though AWeber has been around for years, it really surprised me. I used its AI-powered ‘Smart Designer’ to create branded emails just by entering my website URL. I was satisfied with the initial results, and the drag-and-drop email editor still gave me full control over customization.

As for integrations, AWeber is into them in a BIG way. From e-commerce and website builders to SMS and social media apps – you name it, there’s an app to integrate it with your emails. There are currently over 850 integrations, with more being added all the time.

But what really sets AWeber apart from the competition? It may surprise you to learn that a lot of services outsource the delivery of your emails – one of the many reasons your emails may end up in the spam folder. AWeber controls everything end-to-end to ensure a higher deliverability rate, and that’s what we’re all really looking for in an email marketing service, right? Plus, it now offers a free plan as opposed to a free trial so you don’t need to spend a dime on your email marketing.

Omnisend

![omnisend]()

- Email marketing designed specifically for web store owners

- Easy-to-use automation features even on the free plan

- Highly customizable drag-and-drop editor

- Impressively generous free plan

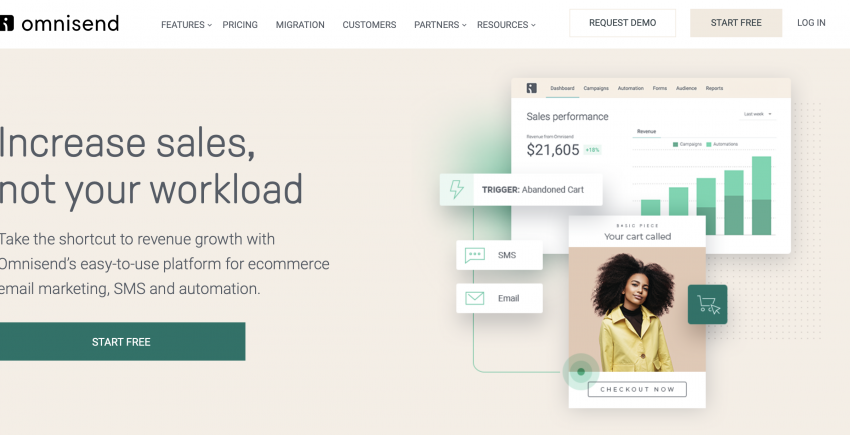

Omnisend offers a unique email marketing proposition: it’s a service for web store owners only. You have to have an e-commerce site in order to use it.

If you’re not looking for a way to boost your store’s online sales through email marketing, then you can save yourself a read – Omnisend isn’t the service for you. But you can find plenty of alternatives on this very list.

That said, Omnisend is a solid choice when it comes to core email marketing features. It covers all the basics – sign-up forms, simple segmentation and list management options, A/B testing, flexible automation workflows, and a drag-and-drop email editor for creating campaigns.

Its biggest strength is how it’s tailored to promoting online stores. It pulls sales and customer data straight from your e-commerce platform, and you can sell directly from your emails with a couple of clicks.

It’s worth noting, however, that Omnisend’s strength is also its weakness. For example, while the sales-oriented analytics are great, the purely campaign-oriented stuff is pretty basic. It’s the same story with the product-focused templates, and with the limited use of landing pages.

That aside, it’s beginner-friendly and comes with an impressive free plan, which allows you to send up to 500 emails per month. Sure, that’s not a lot, but it also gives you access to all the core features available on the Standard and Pro plans, and that’s the impressive part.

Mailchimp

![Mailchimp email templates – best email marketing solutions]()

- Build email campaigns in minutes

- 300+ app integrations

- Award-winning support team

- Excellent real-time reporting

Ah, Mailchimp. It’s one of the biggest names out there, and I had to include it on this list. There’s a reason, after all, that over 11 million people currently use it. It literally took me minutes to put together a professional email campaign, so it’s no surprise it’s popular with just about everyone.

What I like most about Mailchimp is that it’s not just a powerhouse in email marketing. It’s got website building and domains covered too – and who doesn’t appreciate a service that lets you manage everything in one place?

Even better, I was able to manage everything from the mobile app. It might not be as easy to use as the desktop version, but I was able to track campaigns as well as create, edit, and preview mobile designs on the go.

And once I’d sent my email campaigns out, Mailchimp’s analytics and real-time reporting were great for further optimizing. They even sent me personalized recommendations and data-driven insights, and a service that helps me without me even asking is a winner in my book.

GetResponse

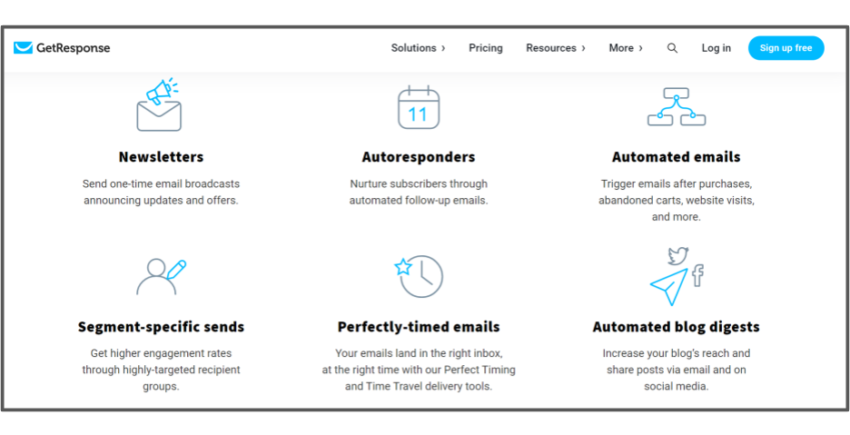

![GetResponse email marketing features – best email marketing solutions]()

- An excellent solution for small businesses

- Impressive 99% email deliverability rate

- Spam checker and other optimization tools

- 1000 free stock photos included

If you already have some experience with email marketing, you might be looking for a service that can do a little more. For me, that’s GetResponse. It’s been designed with larger businesses and growing teams in mind, so it might not be the best choice for beginners. But if you’re a smaller business looking to scale up one day, it’s got some tools that may impress you – I know they impressed me when I put together our fully tested, in-depth review recently!

While other services will offer you basic split testing and list management, I found that GetResponse offers a lot more, and for a better price. I’m talking four types of A/B testing, over 130 integrations, a spam checker tool, and handy features like the ‘Perfect Timing’ tool to help you send out emails at the optimum time… for each individual contact.

Yes, there’s a lot to keep you busy, and it took me longer to learn how to use the platform than some of the others on this list, but once I got to grips with the different types of split testing, this was seriously effective in helping me improve my email campaigns.

There’s a reason why GetResponse can boast a 99% deliverability rate – its tools really work, and I can vouch for that. You can even take advantage of the 30-day free trial to try out (almost) all the tools before you make a long-term commitment.

Constant Contact

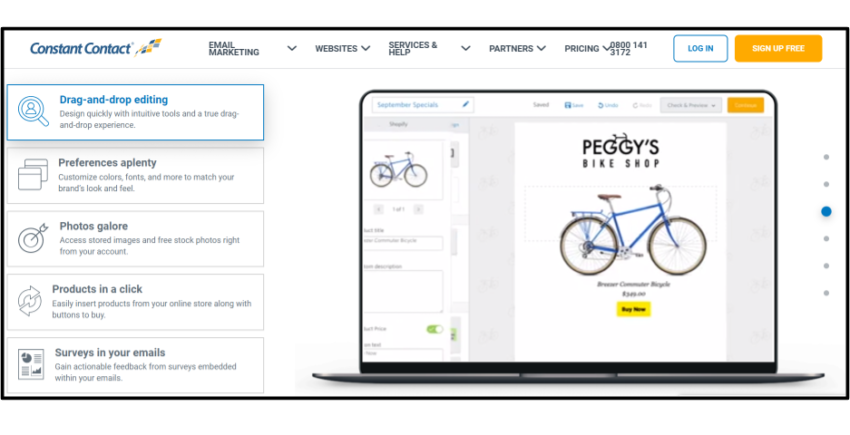

![Constant Contact’s drag-and-drop editing tool – best email marketing solutions]()

- The most beginner-friendly service around

- 240+ high-quality email templates

- Free 60-day basic trial

- Real-time tracking tools

After testing all the major email marketing services out there, I can say that Constant Contact is definitely the easiest email marketing tool to use.

I felt as though the company had really considered the user experience and functionality at every step. From its super accessible reporting dashboard to its intuitive drag-and-drop email editor, it could not have been simpler for me to use.

Even better, I never felt like Constant Contact’s simplicity meant the quality was compromised. I had access to hundreds of professional templates and was able to customize every aspect of my emails – nothing was off-limits. I was even able to create a branded template using my website’s URL. Constant Contact automatically scanned it and pulled my brand logo and colors for me.

I was slightly disappointed that Constant Contact doesn’t offer a free plan (like some of the others on this list), but its 60-day free trial is the longest free trial I’ve seen. I was able to get comfortable with the new tools before I committed to anything.

Benchmark

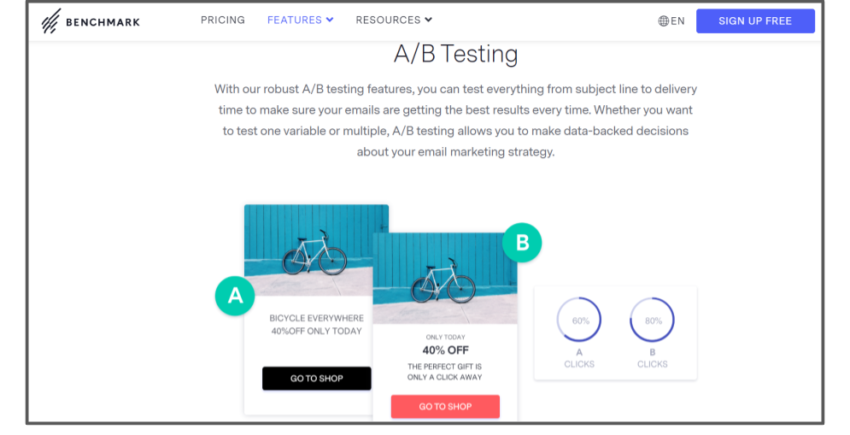

![Benchmark’s A/B Testing features – best email marketing solutions]()

- Simple, powerful tool for beginners

- Smooth, easy-to-use, block-based editor

- 24/7 support in multiple languages

- Advanced automation features with Pro plans

If you take a look at Benchmark Email’s website, you might be tempted by the platform’s snazzy interface alone. I get you – it’s pretty, but what you may be interested to know is that the contents are just as good as the cover. Yep, you don’t make it this high on the list without really impressing me.

So, what’s so good about it? Well, there’s a simple email editor that provides easy block-style editing, and a simple form builder that allows you to easily customize your forms. There’s also a simple workflow builder for easy automation, which comes with 10 pre-made templates to get you started.

Benchmark also offers a free email marketing plan. It does have some limitations, but it gives you enough to get you up to speed with the platform. I recommend upgrading to a Pro plan to access that all-important A/B testing.

The free plan does, however, get you access to 24/7 customer support (something that other services save for paying customers). Support is available in nine different languages – nice one, Benchmark.

Drip

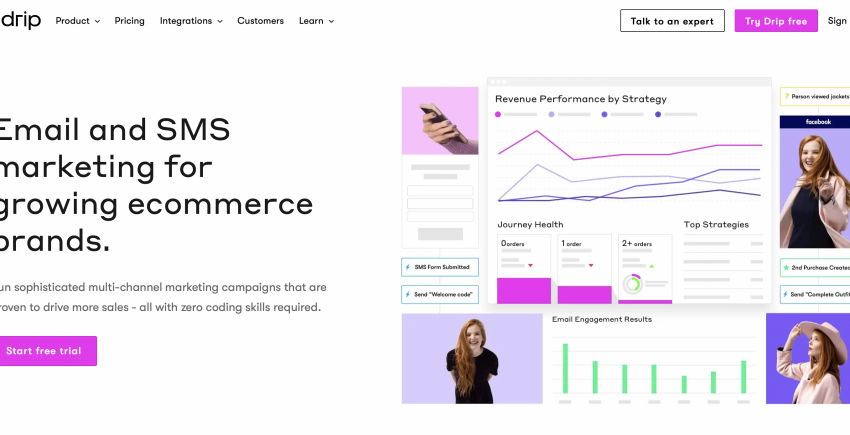

![Drip email and sms]()

- One of the best email template creators

- Easy subscriber filtering and management

- Over 100 third-party integrations

- Fairly detailed analytics

Drip is clearly aimed at people selling stuff online. AKA, the e-commerce crowd. It’s designed to integrate with a variety of online store platforms to help you track sales, and to design email campaigns and automated workflows around your products.

But even without an online store attached, it’s a downright decent email marketing platform with one of the better email template designers I’ve seen. I mean, it really has to be a good template designer, because there are only 7 premade templates. Oh, and there’s no landing page creator.

Still, if that sort of pure-email-and-e-commerce focus sounds right to you, Drip is a solid choice. The subscriber management tools are streamlined and effective. You can integrate your email platform with loads of other services, and the pricing plans aren’t bad, either.

I did like the analytics in particular. While I wasn’t able to generate enough data to make all of the graphs look pretty, I was impressed with how detailed the statistics could be. The analytics platform is also designed to integrate with your online store and keeps track of how your whole online business is doing, not just your email marketing.

Moosend

![Moosend]()

- Easy to learn and use

- A strong A/B testing system

- Integration with Unsplash and Giphy in the email editor

- Interesting automation features

Moosend has an absolutely solid set of general email marketing features. And I couldn’t be happier with that. Whatever you need to actually send out emails is here. It’s easy to learn and easy to use, while also being powerful and flexible.

I was particularly impressed with the automation system, which allows you to trigger email campaigns and workflows in interesting ways. For example, I’ve never before seen a system that lets me send out emails based on my subscribers’ local weather.

The A/B testing system lets you test different kinds of content, including subject lines, of course. But where it gets interesting is that it also lets you test different “senders” – so you can see whether your readers prefer getting emails from “Derek at YourCompany”, or from “YourCompany Inc”. This feature is pretty rare.

Combine all of that with a generous free plan, decent pricing, and a library of good-looking templates, and you have one of our top ten picks for email marketing.

The Global Email Marketing Services Comparison Project – How We Did It

Having developed custom, enterprise-level email marketing systems myself, I approached this project with my own experience in mind. It’s not that email marketing services are hard to develop, per se. But they require attention. They require some developer love. More than anything, email marketing services require a deep, deep understanding of what users actually need.

While I was (almost) sure that some services were going to get it right, I knew that wasn’t always going to be the case. It’s the world we live in. Many companies decide that it’s more cost-efficient to rush development, and instead focus on marketing. Nothing’s cheaper than promises, and god are these services big on promises. Not a single email marketing service promised me anything less than the most powerful, technologically advanced platform available, equipped with every tool I could possibly need to succeed. And build. And grow. Honestly, it all started sounding a bit too much like a self-help book.

There was no escaping it – I was going to have to sign up to each service and actually use it in order to see what it was capable of. Luckily, my generous project manager gave me carte blanche on the budget, which made things easier.

So, how do you find which email marketing service is actually the best? Unlike many other internet-based services, there aren’t that many email marketing platforms. Dozens, at most. That fact alone made me happy, as I knew my colleague, who was in charge of web hosting services, was at his wit’s end. You see, he was having to test literally hundreds of hosts. It’s the little things in life, isn’t it?

The task became pretty straightforward. Make a list of every email marketing service available today, consult my peers to devise a thorough testing methodology, and off we go. Was it quick? No, no it wasn’t. It took me almost a year if I’m being honest. But I wasn’t interested in being quick. I wanted to actually use these services. Having done so, I’m very proud to present you with the results.

One quick note – I tested every email marketing service that was available in English. There are other languages I do not speak, so some language-specific services were beyond my reach. Remember that carte blanche budget I mentioned? That came in handy. I proceeded to hire 25 email marketing experts from all over the world, who followed my methodology and tested every local service. To see their results, change the language in the upper-right corner of this page.

Which Are the Top Email Marketing Services Available Today?

I won’t keep you in suspense any longer. Out of the dozens of services I tested, most didn’t make the mark. In fact, many didn’t even come close. Some seemed to have halted development about five years ago, while others lacked any understanding of what an email marketing service is supposed to do. But the ten services I’ve gathered on this page? Oh, they did alright. Not perfectly, because that’s not really a thing, but definitely alright. Each has its weak points, but I can tell you this without a doubt – these are the best email marketing services money can buy. I’ll soon explain exactly how I tested the services, and what the results were, but take a moment to bask in their glory. Then take another moment to read the full review, if you will.

- ActiveCampaign – Full Review

- GetResponse – Full Review

- Benchmark – Full Review

- Brevo (formerly Sendinblue) – Full Review

- AWeber – Full Review

- Constant Contact – Full Review

- Drip – Full Review

- Moosend – Full Review

- Mailchimp – Full Review

- Omnisend – Full Review

If You Want to Test an Email Marketing Service, You’ve Got to Actually Use It

An email marketing service is no one-and-done tool. It’s not a logo creator or some file converter, which has to take you quickly from point A to point B. An email marketing service is the ongoing heart of your entire online business operation. So the only way to test it is to use it for that purpose.

That means exactly what you think it does. After signing up for a plan, I proceeded to use each service as if I was running a very real marketing operation. Importing contacts, setting up landing pages and automations, and blasting them emails. After that, it was time to analyze the results, segment my audiences accordingly, and repeat. And repeat. And repeat. Because email is a fickle technology. It doesn’t just “work”. It needs time, and effort, and tinkering. Which is exactly what I gave it. That’s the only way to let the service reveal itself and to see if it actually delivers.

And lemme let you in on a little secret about deliverability. Those “email marketing service deliverability figures” you might’ve encountered elsewhere? Pretty numbers like 84%, 97%, or a dreadful 54%? They’re meaningless. Completely made up, and of no consequence to you. I wrote an entire article about why that is, but simply know this – it’s what the service offers, plus what you put into it, that will make your email marketing operation fly.

Regardless, I had planned to use these services for several months, but before that, I needed to organize my methodology. It is true that I have collaborated with companies to develop customized in-house email marketing systems, so I can confidently say that I know what I need. However, I was seeking something beyond that. Which is why I called upon my friends and peers in the email marketing community to come together and honor the sacred pact we made long ago. Using our collective hivemind, which was a sight to behold in itself, we created what is by far the most detailed testing guide I’ve ever seen.

Want to see it? You can’t. It’s now a patented trade secret. Just kidding – but like, you really can’t. We’re paranoid about other websites trying to steal our mojo, and stuff. But what I can give you is the gist. Because we’ve all worked with big-money companies that have all sorts of advanced needs, we knew how good each feature can get when custom-developed (i.e., has tens of thousands of dollars put into it). And we knew exactly which features mattered. As such, we illustrated specific test cases for every important feature and aspect of each service. Simply put, we tested how close each service came to a dream email marketing system, one developed to specifically suit an enterprise client’s needs.

Results and Analysis – How Did the Services Actually Do?

My brain can’t even remember how many phone calls I’ve had with support agents, how many contact lists I’ve imported, and what A/B testing brought me where. But it doesn’t need to, because I’ve gathered everything in beautiful spreadsheets. Isn’t Excel great?

What, you switched to Google Sheets? Shame on you.

Anywho, I went and organized it all so that we’ve got five sections for each service:

- Features

- Deliverability

- Analytics

- Support

- Pricing

Features

Not surprisingly, features made up the bulk of my research. There are a ton to check for and to test. These are going to be your marketing tools, and the better they are, the better you’ll do.

| | ActiveCampaign | GetResponse | Benchmark | Brevo | AWeber | Constant Contact | Drip | Moosend | Mailchimp | Omnisend |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Third-party integrations | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Template selection | 5 | 5 | 4.5 | 5 | 4.5 | 5 | 2 | 4.5 | 2 | 2 |
| Email editor | 4.5 | 4.5 | 5 | 4.5 | 5 | 4.5 | 5 | 4 | 3 | 3 |
| Mobile responsiveness | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Stock photos | ✘ | ✘ | ✘ | ✘ | ✔ | ✘ | ✘ | ✔ | ✘ | ✘ |
| RTL support | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ | ✔ | ✘ | ✘ | ✘ |
| Simple personalization | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Dynamic content | ✔ | ✘ | ✘ | ✔ | ✔ | ✘ | ✔ | ✔ | ✘ | ✔ |
| A/B testing | 3 | 4.5 | 5 | 5 | 5 | 3 | 5 | 5 | 4 | 3 |
| Import formats | CSV | CSV, TXT, Excel, VCF, ODS | CSV, TXT, Excel | CSV, TXT, Excel | CSV, TSV, TXT, Excel | CSV, TXT, Excel, VCF | CSV | CSV, TXT, Excel | CSV | CSV, Excel |
| Third-party imports | ✔ | ✔ | ✔ | ✘ | ✘ | ✘ | ✔ | ✔ | ✘ | ✔ |
| Segmentation | 4 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 4 | 5 |
| Suppression list | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Landing pages | 5 | 5 | 5 | 3.5 | 4 | 3.5 | – | 4.5 | 3.5 | 3 |
| Signup forms | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Automation | 5 | 4 | 4 | 5 | 4 | 3 | 5 | 5 | 2 | 4 |
| Final features score | 4.8 | 4.8 | 4.8 | 5.0 | 4.6 | 4.3 | 4.0 | 4.5 | 4.0 | 4.0 |

Third-party integrations

Email marketing services haven’t been just about emails for a long time. It’s 2024, and if you have something to say to your subscribers, you’ll probably want to blast it on multiple channels. Great email marketing services allow you to do just that. When creating an email to send to your list, you’ll be able to simultaneously post its content on social media channels and other third-party services. GetResponse really shines here, with easy simultaneous publishing on Facebook, Instagram, and many other platforms.

Template selection

Instead of wasting your time on designing your emails from scratch, templates allow you to just fill in the blanks and blast away. You’ll require different templates for different types of emails – a newsletter update is very different from a catalog email, or a promotional call to action. Let’s not forget that in addition to being eye-catching and stylish, templates also need to be lightweight and well coded. A lot of variance was observed here, with ActiveCampaign and Constant Contact truly knocking it out of the park. The same cannot be said for Mailchimp and Drip. The former seems to think it’s still the 90s, while the latter has a selection so minimal it’s embarrassing.

Email editor

While templates are going to be your email’s backbone, you’ll probably need to tweak them. An image here, a paragraph there, a different page layout – whatever fits your content and brand. Great editors are drag-and-drop, point-and-click experiences, the kind that’s so self-explanatory you’ll just know what to do without ever having used it before. That’s actually the situation with most services, although Benchmark really shined with its advanced editor capabilities. ConvertKit does the opposite, and I had such a bad time using it that even just remembering the experience makes me want to lie down.

Mobile responsiveness

You probably already know this yourself, but I can’t stress enough the importance of mobile responsiveness. Most of your readers are going to be using their mobile phones, and a non-responsive email might as well go straight to spam. Unlike the services that didn’t make this list, our top ten all did a good job here. They’re all winners, but I’ll commend Mailchimp for letting you simulate exactly how your email will look on dozens of different phones and tablets.

Stock photos

An email marketing service can still be phenomenal without providing free stock photos, but doing so makes your life so much easier. Searching the internet for the right image takes time and effort, and the good ones usually cost money. A service with stock photos saves you that trouble. AWeber and Moosend were the only providers to offer stock photos as part of the service.

Right to Left (RTL) support

This is relevant for content in RTL languages like Arabic, Hebrew, and Farsi. Not doing that? Move along to the next feature. But if you’re planning on writing in one of these languages, you absolutely have to choose a service that provides RTL support. It was funny to see how some services, like Drip, weren’t sure if they supported RTL. They checked, and they do. While it might require some HTML knowledge, it’s nothing that support can’t help with. All services except Constant Contact and Moosend do alright here. These two don’t support RTL at all.

Simple personalization

Instead of saying “Dear reader”, utilize the data you have on your subscribers and say “Dear John”, or “Dear Sandy”. This isn’t just a nice, superficial touch. Using your subscriber’s name creates a feeling of closeness that immediately makes them feel more inclined to read what you have to say. All services did a good job of implementing personalization.

Dynamic content

This is personalization’s big brother. It lets you use the data you have to customize content. For example, you could send a different image depending on your reader’s gender. Or phrase your content to better suit readers from a certain geographical location. It’s a rare feature to have on all but the most expensive plans, but with ActiveCampaign you’ll have it from the get-go. Even better, ActiveCampaign’s intuitive interface lets you easily include as much dynamic content as your heart desires.

A/B testing

A super-useful feature that’s going to become your best friend. Unsure about a certain phrasing in your subject line, or the image you’re using? Don’t just send a regular blast. Create an A/B test, and create two versions of the same email. The platform will then send version A to a small subsection of your mailing list, and version B to another. The version that gets the best click/open response will “win”, and will automatically be sent to the rest of your list. While this is a pretty common feature, some services provide very buggy and unreliable A/B testing. And others limit your test to the subject line only. GetResponse and Benchmark are the winners here, in my opinion.

Import formats

When importing a contact list, you’ll be stuck with whatever format you were given. Usually, it’s a CSV, which is why supporting CSVs is the bare minimum for most email providers. But the more file types and import formats that are supported, the more freedom you’ll have. Credit to GetResponse and AWeber here, and a surprising thumbs down for ActiveCampaign.

Third-party imports

Sometimes, you might have a contact list you want to import straight from a third-party service, like a CRM or a competing email marketing platform. Good sports Benchmark and GetResponse let you do exactly that, while the usually technologically great AWeber disappoints.

Segmentation

One of the most important features of an email marketing service, if not the most important feature. A mailing list is made up of contacts, which you’ll be gathering left and right, and they will be the recipients of your email marketing. But a mailing list is far from a static thing. At least, that’s the case with a great service. Instead of simply blasting your emails to members of a certain list, segmentation allows you to go deeper. How deep? Well, I’m a big fan of complete flexibility. I want to be able to use all the information I have on a contact. To segment by gender, origin of signup, birthdate, location – anything. And to then tailor specific emails that each segment will respond to.

But that’s not all. Behavioral segmentation is the really powerful stuff. This means being able to segment by reaction to your previous emails. For example, by singling out a specific campaign, and sending out emails only to people who clicked it – the highly engaged users. Segmentation is also a great way to clean up your mailing list and remove users who aren’t engaging with your content, and are just eating up space.

Brevo (formerly Sendinblue) and Benchmark are the big winners here. With complete control over your lists, and the ability to create segments using multiple rules and conditions, I felt like they really let me utilize the information I’d gathered.

Suppression lists

Email marketing can get dirty. Your competitors might sign up to your newsletters using fake or spammy addresses, just to hurt your sender reputation. To avoid such problems, you’ll want to use a suppression list, which allows you to create rules and specify email addresses that will never receive your emails. All services did a pretty good job here. I’d say that GetResponse made things especially easy, but really all the services pulled through.

Landing pages

Also known as mini-sites. Because this is what good email marketing services do – so much more than the minimum. They let you create landing pages that you can use to funnel readers into signing up for your list and also to promote events, products, and more. This saves you the need to purchase hosting and build a website, especially for small and limited-time projects. Complete with a drag-and-drop editor, and accessible from your main domain, landing pages let you take full control of online marketing. It’s a crying shame that Drip doesn’t offer landing pages at all. All others do, but with varying degrees of success. Mailchimp’s landing pages are very problematic, and I found myself extremely frustrated with them. ActiveCampaign won my heart with an excellent editor and ready-made templates that allowed me to get beautiful one-pagers online in about half an hour.

Signup forms

When building a landing page with your email marketing service, you’ll probably add a newsletter signup form to expand your reader base. But what if you already have a website? Or if a partner wants to promote you? For that you need a signup form you can “paste” into another website using a simple link. I found that many services didn’t provide such an option but, of course, they never made this list. All ten that you see here did. Who did it with the most style? Maybe GetResponse. I guess I just like their intuitive graphic interface.

Automation

The ultimate feature. The one that truly separates the best services from the merely good ones. It saves you time, it connects you with your readers, and it’s a beautiful use of technology. The options are unlimited (with good automation!), but the idea is simple: create rules and scenarios, and decide on an automated reaction sequence. Send a personalized birthday email with a coupon on each contact’s birth date? Check. Create a welcome email chain that will send at predetermined intervals, and will let your readers get to know you? Check. Automate transaction receipts, customer support responses, or even emails to bring an unengaged reader back into the fold? Check, check, check.

Automation is also where some of these services faltered. Even on the advanced plan, Mailchimp really limits what you can do. And Constant Contact’s cumbersome approach to automated emails didn’t sit well with me. ActiveCampaign, Brevo, and ConvertKit, on the other hand, got it absolutely right. They let me do anything I wanted with almost limitless functionality, easy drag-and-drop interfaces, and the ability to create extremely detailed scenarios.

Features – Bottom Line

On paper, Brevo (formerly Sendinblue) is the absolute champion where features are concerned. A perfect score. Does that mean Brevo should definitely be your choice? While its template selection is astounding, and advanced features like automation work like a charm, there’s still a lot to say for the other services. ActiveCampaign, GetResponse, Benchmark, and AWeber all deliver excellent results, albeit in a slightly different way. I happened to like Brevo’s way the best, but that’s me. There is nothing you can’t do with these five services – it’s mostly about how easy or straightforward it was for me.

What about Constant Contact, Drip, Moosend, Mailchimp, and ConvertKit? Well, they lag behind. Quite a bit, I might add. Mailchimp was buggy, ConvertKit’s template selection is abysmal, and the way they all handle A/B testing and landing pages just isn’t as smooth.

Deliverability

I’ll say it again – there are no funny-looking percentages here. That’s a myth. I’m talking about the tools, policies, and security measures that each service has. Use a service that doesn’t have them, and you risk not getting delivered. Simple as that.

| | ActiveCampaign | GetResponse | Benchmark | Brevo | AWeber | Constant Contact | Drip | Moosend | Mailchimp | Omnisend |
|---|---|---|---|---|---|---|---|---|---|---|
| DKIM Authentication Check | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Active Spam Policy | ✘ | ✔ | ✔ | ✘ | ✔ | ✘ | ✘ | ✔ | ✘ | ✘ |
| Affiliate Marketing Policy | Allowed but limited | Allowed | Allowed but limited | Allowed but limited | Allowed but limited | Not allowed | Allowed but limited | Not allowed | Not allowed | Not allowed |
| Private IP | ✔ | ✔ (most expensive plan only) | ✔ | ✔ | ✘ | ✔ | ✔ | ✔ | ✘ | ✔ |
| Final deliverability score | 4.5 | 4.6 | 4.8 | 4.5 | 4.5 | 4.5 | 4.0 | 4.8 | 4.6 | 3.5 |

DKIM Authentication

A service that didn’t offer DKIM authentication had no chance whatsoever of getting on this list. None. Without getting too technical, DKIM authentication lets you prove to your recipients that you are indeed the owner of your email address. The process will require some relatively heavy configuration, which might not be the most fun for those of you who are tech-averse. The services mostly differ here by the guides they have on the subject, how easy it was to generate the key, and how available the support agents were to guide me on the process. Kudos to ActiveCampaign and AWeber, prompt and professional as always. Authenticating with them was a cinch. And a dishonorable mention to Mailchimp, which hides its DKIM section so well I thought at one point it didn’t have it at all.

Active Spam Policy

Short introduction first. With email marketing services, you share an IP with other users. Your emails will get sent from the same IP, and your IP’s “reputation” will affect everybody’s deliverability. If some users misbehave and get marked as spam, or worse, you might get hurt yourself. It could get as bad as you being blacklisted, despite your emails being completely fine.

Because of this, we looked for services that fight spammers and minimize the chance of such tragedies. It’s important that they specify exactly how spam is forbidden in their terms of use (which they all do, more or less), but that alone isn’t enough. One of the most ingenious tests that we devised involved uploading a CSV filled with spammy contacts. You see, spammers are a lazy bunch. They buy contact lists, import them, and hope for the best. These lists quickly become known, and trying to upload one is a clear sign of a spammer. We attempted to import such a list, hoping to see the services “catch” it, which is exactly what happened with GetResponse, Benchmark, AWeber, and Moosend. All the others greatly disappointed us, as the import went undetected.

Affiliate Marketing Policy

Two things. First, whether you’re an affiliate marketer or not, you still want the service to have a clear and concise policy on the issue. Second, if you aren’t an affiliate marketer, then you definitely want to choose a service that forbids it. Affiliate marketing isn’t illegal, but let’s just say it’s prone to get sketchy. It sits somewhere on the line between spam and not-spam. If you’re not doing affiliate marketing, don’t risk using a service that allows others to do so. Constant Contact and Moosend are the best in this regard, strictly forbidding it. If you do happen to be an affiliate marketer, you’ll find that other services allow it, albeit on limited terms. Just know that one wrong move might get your account suspended.

Private IP

Never available with basic, affordable plans, but usually part of the higher-tier ones, a private IP solves all of your “neighbor problems”. If you’re using a private IP, you don’t have to worry about them misbehaving and your email marketing getting hurt as a result. While it’s not surprising that the generally flimsy Mailchimp doesn’t have any plan with a private IP, the fact that AWeber doesn’t either was quite shocking. A GetResponse agent told me that they don’t have the option, but I found it actually is available on the most expensive plan. Go figure. All the rest do alright here, letting you purchase a private IP as a separate add-on, or as part of the higher-tier plans. Warning – it’s not cheap.

Deliverability – Bottom Line

For me (and for you too, if we’re being honest – you just might not feel that way yet), the active spam test is the most important metric, and, as such, GetResponse, Benchmark, and Moosend are the winners here. The big loser here is Mailchimp, failing the spam test hard and not even having the option of a private IP. With the other six, I’d suggest keeping a very close eye on your deliverability performance. It might be ok, but if things look weird, definitely consider switching services or moving to a private IP.

Analytics

One of the best parts of a good email marketing service is seeing it all come together. Just chilling in front of a detailed analytics board, watching glorious graphs and pie charts. Good analytics let you know exactly how you’re doing, and exactly what you need to do to get even better results.

| | ActiveCampaign | GetResponse | Benchmark | Brevo | AWeber | Constant Contact | Drip | Moosend | Mailchimp | Omnisend |
|---|---|---|---|---|---|---|---|---|---|---|
| Opens | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Clicks | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Bounces | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✘ |
| Unsubscribers | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Location | ✔ | ✔ | ✔ | ✘ | ✔ | ✘ | ✔ | ✔ | ✔ | ✘ |
| Location world map | ✘ | ✔ | ✔ | ✘ | ✘ | ✘ | ✘ | ✔ | ✔ | ✘ |
| Graphical breakdown | 5 | 4.5 | 5 | 3 | 5 | 3.5 | 4 | 4 | 4 | 3 |
| Final analytics score | 4.7 | 4.2 | 5.0 | 4 | 5 | 3.8 | 4.5 | 4.5 | 4.6 | 4.4 |
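If you want to sanity-check the numbers any of these dashboards show you, the four basic metrics in the table boil down to simple ratios. Here’s a minimal Python sketch, assuming one common convention: bounce rate is measured against emails sent, and everything else against emails delivered (sent minus bounces). The `CampaignStats` class, the `campaign_rates` function, and the sample figures are hypothetical and not taken from any service’s API, and individual services may define these rates a little differently.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    """Raw counts for one campaign (hypothetical structure, for illustration)."""
    sent: int
    bounces: int
    opens: int         # unique opens
    clicks: int        # unique clicks
    unsubscribes: int


def campaign_rates(stats: CampaignStats) -> dict:
    """Turn raw counts into rates, using delivered = sent - bounces."""
    delivered = stats.sent - stats.bounces
    if stats.sent <= 0 or delivered <= 0:
        raise ValueError("need at least one sent and one delivered email")
    return {
        "bounce_rate": stats.bounces / stats.sent,
        "open_rate": stats.opens / delivered,
        "click_rate": stats.clicks / delivered,
        "unsubscribe_rate": stats.unsubscribes / delivered,
    }


# Made-up numbers for a 1,000-recipient campaign.
example = CampaignStats(sent=1000, bounces=50, opens=380, clicks=95, unsubscribes=19)
rates = campaign_rates(example)
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```

Comparing these rates across campaigns, rather than staring at raw counts, is usually what tells you whether a given email actually did better or worse than the last one.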

Opens

Basic stuff. You want to be able to know who opened your email, and who didn’t. All did well.

Clicks

Going a bit deeper – who actually clicked an element in your email, after opening it. You’d be surprised how many “services” today falter here. I sent hundreds of emails and had my lackeys – sorry, colleagues – specifically interact with elements in the emails to make sure the analytics were correct. It’s all good. These ten are the best there is, remember?

Bounces

Not all your emails are going to reach their audience. Sometimes a contact’s inbox is full or their mail server is temporarily unavailable, which results in a “soft” bounce – try again, and it might work. Other times, people will sign up with fake addresses that don’t exist, so your email to them will forever live in limbo. That’s a “hard” bounce. All services enabled me to view bounces, but hard and soft ones were always lumped together. I would’ve loved to have soft and hard bounces separated, but none of the services provided this. Systems I’ve helped develop did. I guess if it’s really important to you, invest the small fortune it takes to develop one.

Unsubscribers

It hurts, but some of your subscribers are going to opt out. That doesn’t mean you’re the problem, necessarily. Or maybe it does. It helps to be able to see which emails resulted in people unsubscribing, so you can learn to avoid expensive mistakes. All services did okay here.

Location

Aha! And so the services begin to diverge. Not all of the ten let me get a breakdown of my recipients by location. Which is a shame, because it’s such a nifty tool to have. Location breakdown once helped me realize I was under attack by a competitor. How, you might ask? Because after sending a wine newsletter to what were supposed to be San Francisco-area contacts, my reports indicated hundreds of readers in the Philippines. I sent those IPs straight to my suppression list.

Brevo, Constant Contact, and ConvertKit really disappointed me. No location breakdown with those guys. The others all did well, although something seems to be buggy with Mailchimp’s information. Readers that I knew were from the Middle East were marked as being from the US. I wouldn’t trust that one so much.

Location world map

How about letting me quickly view how things look on a world map? This ingenious feature wasn’t available with all services that had location breakdown. ActiveCampaign, AWeber, and Drip – I’m looking at you. Implement this, pronto! GetResponse, Benchmark, and Moosend did this beautifully.

Graphical breakdown

Having data at your disposal is not the same as easily understanding it. I enjoy going over a 200-row table, sure, but I would love to get some visual aids. But “visual aids” doesn’t sound so good, so we called this “graphical breakdown” instead. The more, the merrier. Pie charts, bar charts, graphs, distribution plots – give me everything. But, sadly, not all of the services did. Oh no. Mailchimp and ConvertKit’s flimsy offerings are just not enough. The generally dependable Brevo also falters here. The winners? ActiveCampaign, Benchmark, and AWeber. With detailed graphs and charts for every type of report, from clicks over time to monthly subscriber growth, I knew exactly what was going on at a glance.

Analytics – Bottom Line

Luckily, everybody did well regarding the basics – opens, clicks, bounces, and unsubscribers. That means all ten will give you the foundation to understand how your campaigns are doing. But who went above and beyond? That would be Benchmark. Hands down, it doesn’t get more detailed than this. A very close second is AWeber. If they only had that world map I love so much… Biggest loser? ConvertKit. If I were nice, I’d say they went minimalistic. But I’m not. And their analytics reports are simply bad. They just really dropped the ball on this.

Support

All these platforms aim to provide a similar service, but each one does things a bit differently. Which is why you’ll probably end up needing some help every now and then, even if you know your stuff. If you’re a beginner, quality support becomes even more important.

| | ActiveCampaign | GetResponse | Benchmark | Brevo | AWeber | Constant Contact | Drip | Moosend | Mailchimp | Omnisend |
|---|---|---|---|---|---|---|---|---|---|---|
| Support channels | Chat, email, phone | Chat, email | Chat, email, phone | Email, phone | Chat, email, phone | Chat, email, phone | Chat, email | Chat, email, phone | Chat | Chat, email |
| Average response time | Minutes | Minutes | Hours | 5 hours | Minutes | Minutes | 1 hour (for email) | Minutes | Never available | Minutes |
| Support hours | Email 24/7; live chat Mon–Thu 8 a.m. to 11 p.m., Fri 8 a.m. to 6 p.m.; phone 8 a.m. to 5 p.m. CST | 24/7 | Email and chat 24/7; phone Mon to Fri, 6 a.m. to 5 p.m. PST | 24/7 | Email and live chat 24/7; phone 8 a.m. to 8 p.m. EST | 24/7 | 9 a.m. to 5 p.m. CT | Not listed | 24/7 | Not listed |
| Professionalism | 4 | 4.5 | 4 | 5 | 5 | 4 | 5 | 3.5 | – | 4.5 |
| Final support score | 4.7 | 4.9 | 4 | 4.5 | 3.7 | 4.3 | 4.5 | 3 | 3.5 | 4.7 |

Support – Bottom Line

So! Make sure the service has the support channel you wish to converse on, and make your choice accordingly. Beware of Mailchimp and Moosend, which are definitely the underachievers of the bunch.

Pricing

How much will all this cost you, what are the options available, and how does it all compare? Put in even simpler terms – which of these services offers the best deal?

| | ActiveCampaign | GetResponse | Benchmark | Brevo | AWeber | Constant Contact | Drip | Moosend | Mailchimp | Omnisend |
|---|---|---|---|---|---|---|---|---|---|---|
| Basic plan price | $29.00 | $0 | $8.00 | $25.00 | $12.50 | $12.00 | $19.00 | $16.00 | $20.00 | $18.00 |
| Plan limits (subscribers/emails/both) | Subscribers | Emails | Emails | Emails | Subscribers | Subscribers | Both | Subscribers | Both | Subscribers |
| Long-term discounts | ✘ | ✔ | ✔ | ✔ | ✔ | ✘ | ✘ | ✔ | ✘ | ✘ |
| Payment period (in months) | 1, 12 | 1, 12, 24 | 1, 12 | 1, 12 | 1, 12 | 1 | 1 | 12 | 1 | 1 |
| Payment options | Credit card, PayPal, wire, check | Credit card, PayPal | Credit card, wire, check, PayPal | Credit card, PayPal | Credit and debit cards | Credit card, PayPal, Amazon Pay, eCheck, check | Credit card | Credit card, PayPal | Credit card, PayPal | Credit card |
| Free plan | ✘ | ✘ | ✔ | ✔ | ✔ | ✘ | ✘ | ✔ | ✔ | ✔ |
| Free trial | ✔ | ✔ | ✘ | ✘ | ✘ | ✔ | ✔ | ✘ | ✘ | ✔ |
| Money-back guarantee | ✘ | ✘ | ✘ | ✘ | ✘ | ✔ | ✘ | ✘ | ✘ | ✘ |
| Final pricing score | 4.7 | 4.8 | 4.5 | 4.5 | 4.5 | 4.3 | 3.7 | 4.3 | 4 | 4.6 |

The reason I’m asking you to think about these results with me is that your specific business needs play a huge part in this decision. I’ll show you what I mean.
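To make the “Plan limits” row concrete, here’s a rough Python sketch of how two different sending patterns price out under a subscriber-limited plan versus an email-limited one. Everything in it is made up for illustration: the tier tables, the prices, and the `tier_price` and `compare` helpers are hypothetical and do not reflect any service’s actual pricing.

```python
# Hypothetical tiers as (limit, monthly price in USD). NOT real vendor prices.
SUBSCRIBER_TIERS = [(500, 15), (2_500, 45), (10_000, 110)]
EMAIL_TIERS = [(10_000, 15), (50_000, 45), (200_000, 110)]


def tier_price(tiers, usage):
    """Return the price of the smallest tier whose limit covers `usage`."""
    for limit, price in tiers:
        if usage <= limit:
            return price
    raise ValueError(f"usage {usage} exceeds the largest tier")


def compare(subscribers, sends_per_month):
    """Monthly cost of the same sending pattern under each limit type."""
    emails = subscribers * sends_per_month
    return {
        "subscriber_limited": tier_price(SUBSCRIBER_TIERS, subscribers),
        "email_limited": tier_price(EMAIL_TIERS, emails),
    }


# Small, stable list with daily blasts: 400 x 30 = 12,000 emails a month.
frequent = compare(subscribers=400, sends_per_month=30)

# Big list, one newsletter a month: 9,000 x 1 = 9,000 emails a month.
occasional = compare(subscribers=9_000, sends_per_month=1)

print("frequent sender:  ", frequent)
print("occasional sender:", occasional)
```

With these invented tiers, the frequent sender pays less on the subscriber-limited plan, while the occasional sender with the big list pays far less on the email-limited one. Plug in the real tiers of the services you’re considering to see where your own business lands.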

Basic plan price

The numbers speak for themselves, as numbers usually do. The cheapest way to go is ActiveCampaign, without a doubt. No, the basic plan doesn’t have all the features the higher-tier plans come with, but it’s much more than what Mailchimp offers you for double the price. GetResponse, Benchmark, ConvertKit, and Moosend also do well here. If you can’t afford about a dozen dollars a month to run your business right, you, my friend, might be in the wrong business. On the more expensive side, we have Brevo, Drip, and Constant Contact. Are they worth it? Well, Drip could be, especially if you’re knee-deep into e-commerce. Brevo’s powerhouse features obviously carry a high price tag, so make sure you need what they offer.

Subscriber limit vs email limit

Are you planning on sending frequent email blasts to a relatively limited or stable number of people? If that’s the case, you’ll definitely want to choose a service that limits subscribers, but not emails – ActiveCampaign or AWeber, for example. Or are you going to send fewer emails, but expect your contact list to grow to thousands and thousands of users? Then go with a service that limits emails, but not subscribers. GetResponse or Benchmark will do just fine. This decision is major, so I really suggest you think about it. The difference in cost can add up quickly as you grow.

Long-term discounts

You won’t find much in the way of major discounts in the world of email marketing services. Minor ones are available when signing up a year ahead, and the services sometimes run promotions, so you might get lucky.

Free plan vs free trial

The concept of “free” sounds appealing, but it’s important not to fool yourself. These companies aren’t charities, and what starts out as “free” can quickly turn into “very expensive.” This is the essence of free plans – they lure you in, and you invest hours in setup and configuration. Then, just as you outgrow the limits, you find yourself locked in. Even worse, a free plan is always extremely limited, so you won’t really know what to expect of the service. You’ll have a general idea, sure, but do you always run your business based on vague concepts and gut feelings? Remember what happened to Santino Corleone.

As such, I’d truly recommend avoiding free plans. Free trials, like the one GetResponse offers, are much better. You get a free month to play around with everything the service can offer. By the end of it, you’ll have an excellent idea of what it’s capable of, and how you feel using it.

Money-back guarantee

A bit of respect for my buyer’s remorse, that’s all I’m asking for. Sadly, most services don’t provide any money-back guarantee. The only two saints here are Constant Contact and ConvertKit. Now, I do think that the free trials that GetResponse and ActiveCampaign provide give you enough time to make an informed decision, but if you know yourself to be extremely flaky, take this into account.

Pricing – Bottom Line

As I said before, this really depends on you. Understand your business plan, and choose what you want to be limited by – subscribers or emails. That alone will cut your options in half. After doing that, don’t be distracted by free plans or beautiful celebrities. Do as you’ve been trained to do and understand that your business is worth investing in. A free trial is okay, too.

If you’re one of those people who can barely make a decision, then know that ConvertKit does give you the greatest freedom – a free plan, a money-back guarantee, cheap prices all over. Not my favorite service, but undeniably budget-friendly. My personal recommendations would be ActiveCampaign for a subscriber-limited service, and GetResponse for an email-limited one. They’re powerful, well-supported, and very fairly priced, plus you can check them both out with a free trial.

Final Conclusions and Recommendations

When you come right down to it, most of the big-name email marketing services do almost the same thing, with similar feature sets. That’s not a bad thing, as such. It just means that these services have seen what works, and have each tried to put their own spin on the formula. If it ain’t broke, don’t fix it. However, this relative uniformity makes picking an email marketing platform for the long term just a little bit harder.

So, you have to go by what you need, specifically. What is your business about? How much time do you want to spend on your emails? What other online platforms do you want to integrate with your email marketing? ActiveCampaign claimed the top spot by providing the best overall experience, but if you want better A/B testing features, GetResponse or Benchmark might be for you. If you specifically want a bunch of e-commerce data in your email reports and campaigns, Drip might actually be the best choice, even though it came in at #6.

I can only provide you with the most precise information I have. The rest depends on you. But hey, you’re clever! I have faith in you. You’re going to achieve great things, make a lot of money, and go far. Look at our complete reviews for a thorough analysis of each service. All the best with your marketing adventure!

FAQ

What is the best free email marketing service?

For the best ongoing free plans, I’d suggest checking out Brevo (formerly Sendinblue) or Benchmark Email. You’ll get access to all the features you need without having to pay for any unnecessary extras. Brevo’s free plan allows you to send up to 300 emails a day, while Benchmark’s free plan gives you access to 24/7 customer service.

Is email marketing still effective?

Yes! In fact, studies show that email gives you the best return on any marketing investment. To optimize your email sends and make sure you’re reaching the right people at the right time, an email marketing service is the best option. Services like Constant Contact, GetResponse, and Brevo (formerly Sendinblue) can help you craft professional and effective email campaigns in minutes. Constant Contact even offers you a free 60-day trial (if you’re in the US) so you can give it a go yourself.

Which email marketing service is best for small businesses?

Services like ActiveCampaign, Constant Contact, and Brevo provide the best email marketing tools for small businesses and all give you the ability to scale up as you grow. If you’re not sure which service is right for you, we’ve put together a list of the best email marketing solutions for small businesses to help you decide.

How do you make money with email marketing?

The best way to make money with email marketing campaigns is to integrate your emails with an e-commerce platform like Shopify. Some email marketing services support e-commerce more than others. For example, GetResponse can help you monetize and manage your email marketing with an automated sales funnel generator, and with an impressive 99% deliverability rate, you can be sure your customers are getting your mail.

Can you do email marketing on Wix?

Wix may be best known as a website builder – perhaps because of its attractive free plan – but the platform also provides an email marketing service called Wix Shoutout. It’s perfect for small businesses, with designer-made templates and an intuitive email editor.

What is the best email marketing software for Shopify?

There are many email marketing services that allow you to integrate your campaigns with Shopify, but some are better than others. I’d check out this list of the top five Shopify email marketing integrations to help you pick the right one for your business.

Which email marketing service is the best?

If you’re looking for an email marketing service that can do it all – help you grow your business, improve deliverability, increase open rates and click-throughs – ActiveCampaign has it all covered. Plus, it’s the easiest email marketing service to use and it offers a free trial for you to try out its features before committing to a long-term plan.