Key Takeaways:

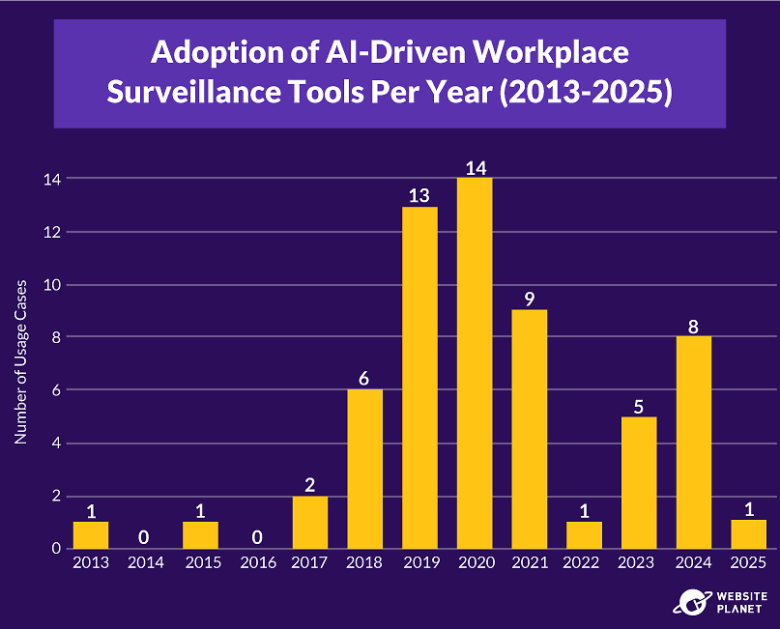

- The pandemic fueled the adoption of AI-driven employee surveillance, with 84% of the cases we studied occurring from 2019 onwards.

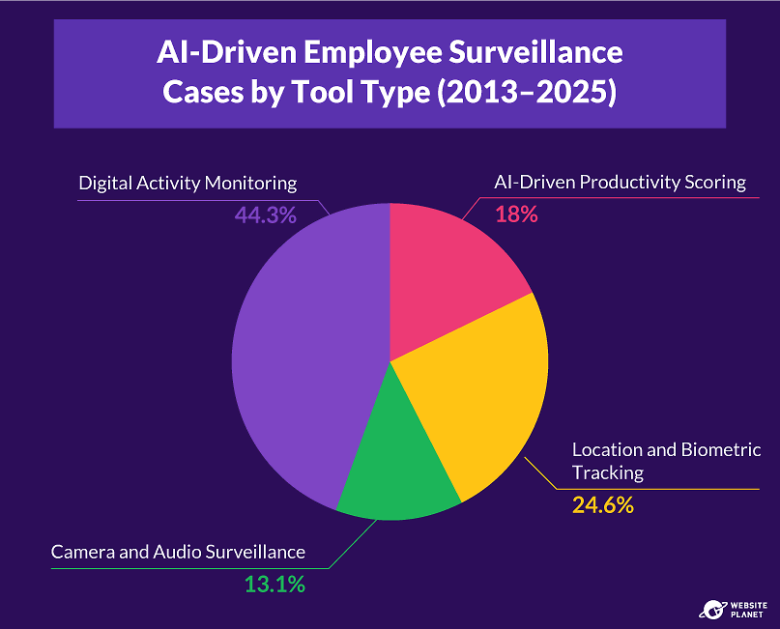

- In 44% of the instances we studied, companies used AI to monitor digital activities and behaviors, such as keystrokes and idle times.

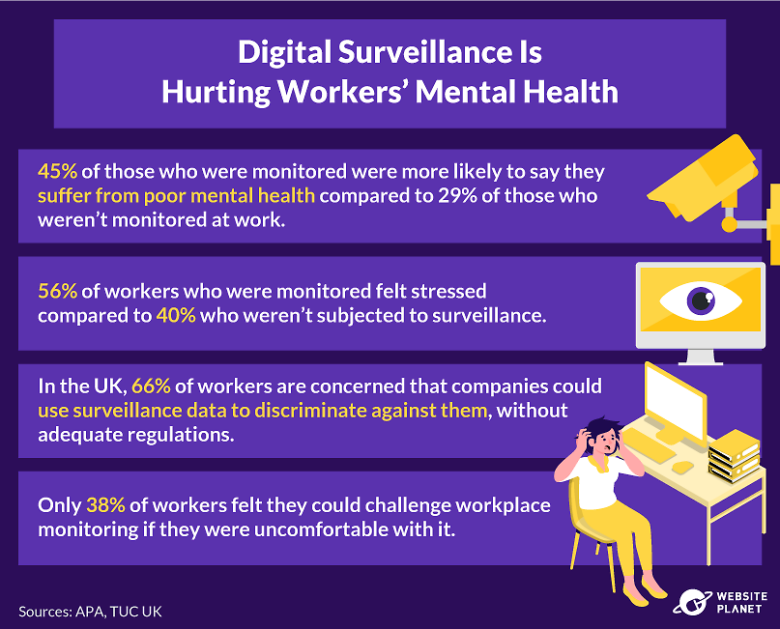

- Workers under surveillance were 1.5 times more likely to say their mental health is poor compared to those who weren’t monitored.

- The EU leads on AI-specific regulation with its AI Act, while the US, Canada, and the UK lag behind with a patchwork of rules that don’t always fully protect workers’ rights and privacy.

Digital workplace surveillance isn’t a new trend, but using artificial intelligence (AI) to power it is. AI can now analyze every login, idle session, keystroke, mouse click, and frame of camera footage to determine “how good” you are at your job.

However, pairing monitoring activities with AI models raises legitimate privacy and ethical concerns, especially when management decisions are informed by AI systems that are susceptible to algorithmic flaws and biases. These concerns are more serious when AI fully automates decisions without adequate human oversight.

Given this trend, we at Website Planet researched the adoption of AI surveillance and its impact on employees. We also examined how various jurisdictions regulate the practice and whether workers’ rights are adequately protected.

AI-Powered Workplace Surveillance Is Growing

The need to monitor remote workers during the pandemic accelerated the adoption of AI-powered employee surveillance. According to a 2022 Gartner report, only 30% of large businesses used technology to monitor workers before the pandemic, a share that is now estimated at 70%.

By incorporating AI into surveillance tools and processes, businesses can easily track you and your activity — from keystrokes and conversations to movement history and biometric data. While it’s understandable that employers want to make sure employees are doing their jobs in the best possible way, where should the line be drawn? At what point does supervision become an invasion of privacy, even if you’re on the clock?

For our research, we considered 61 documented instances of AI usage in workplace monitoring, involving 30 corporations such as Microsoft, Amazon, Uber, Barclays, and AstraZeneca. These cases span from 2013 to 2025 and cover a range of industries, primarily in the US, Canada, the UK, and the European Union (EU).

Since businesses rarely disclose the use of AI in employee surveillance, our dataset mainly consists of cases covered by the press, social media, and other online sources. Typically, these cases involved conglomerates and medium-sized companies.

To see what types of data these companies were collecting, we classified each instance of AI surveillance in our dataset into one of the following categories:

- Digital Activity Monitoring – Tracking device usage and digital behaviors, such as websites visited, applications used, emails sent, and keystrokes, to assess productivity or detect security breaches.

- Camera and Audio Surveillance – Recording video or audio to monitor behavior, promote safety, or enforce compliance with company policies.

- AI-Driven Productivity Scoring – Analyzing patterns and digital behaviors to determine a group’s or an individual’s productivity level.

- Location and Biometric Tracking – Monitoring physical location and movement via GPS, RFID, or similar tools, and capturing biometric data (such as fingerprints and videos for facial recognition) to determine attendance, control access, or verify identity.

Our Findings: What Are Companies Tracking?

Our analysis revealed that 44.3% of the cases involved companies tracking their employees’ online activities and digital behaviors. This form of surveillance is relatively easy to implement, as many applications now come with built-in AI features that have monitoring capabilities. It’s also the simplest (though not the most accurate) way to track productivity and identify non-compliant employees.

In nearly a quarter of the cases studied, companies relied on AI surveillance tools to monitor their employees’ locations and biometric data. These companies typically operate in retail or logistics, where monitoring fleets and assets is critical.

However, this form of surveillance can be problematic because of algorithmic bias. For example, AI video-recognition software could interpret a woman’s facial expression differently from a man’s. Such systems are also ill-equipped to understand social context and could flag normal behavior, leading to unfair assessments.

Meanwhile, AI-driven productivity scoring tools, a relatively new monitoring technology, account for almost a fifth of the cases (18%). This suggests that companies are keen to maximize productivity and may be using these scoring tools to target low-performing teams or areas.

Yet productivity scores can be misleading. They don’t account for time spent thinking, offline discussions, or contributions to decision-making, problem-solving, and idea generation. Measuring productivity by keystrokes, mouse clicks, and screen captures also fails to reflect the quality and impact of an employee’s work.

The graph above shows the distribution of the cases we considered for this study. It suggests that work-from-home policies during the pandemic accelerated the adoption of digital worker surveillance. Reported cases dropped in 2022, likely because more employees were returning to the office under return-to-office mandates.

As workplaces returned to business as usual, the media and online sources seemed to report less employee backlash against AI surveillance. However, this doesn’t necessarily mean companies weren’t adopting AI; it may only reflect a decline in reported usage. Reported cases rose again in subsequent years, suggesting that AI-based surveillance is here to stay.

Notably, the use of AI-driven productivity scoring tools was first reported in 2017, and we found reported cases nearly every year since, except in 2019 and 2022. This fairly consistent trend suggests sustained interest among companies in incorporating AI into their performance management strategies.

Notable Cases of AI-Driven Worker Surveillance

We’ve seen several high-profile cases of corporations implementing AI-based monitoring in the last few years. Some still use these monitoring technologies despite public scrutiny, while others have stopped using them after company-wide complaints and media coverage.

Let’s go over a few of the most notable instances of AI surveillance considered in our research.

Amazon: Monitoring Breaks and Recording Driver Behaviors

Amazon has frequently come under scrutiny for its controversial digital employee monitoring practices, which are still in use. One of the company’s surveillance systems tracks “Time off Task,” which measures how long and how often warehouse workers take breaks. First reported in 2017, the system sends automatic alerts when a worker’s productivity drops, allowing managers to target, reprimand, or even fire low-performing workers.

Delivery drivers face similar scrutiny. In 2021, the company installed AI-enabled cameras inside delivery vans to capture and analyze drivers’ every move. Drivers had to either resign or sign a consent form allowing the company to track their location, driving speed, traffic violations, and risky driving behaviors.

Microsoft: Scoring Individual Productivity Levels

Faced with backlash from privacy campaigners, Microsoft had to revise Productivity Score, an evaluation tool built into Microsoft 365 that measured how productive individual workers were. The score was calculated from data such as the number of emails sent, how active a worker was in group chats, and how often they worked on shared documents.

By the end of 2020, Microsoft announced that it had removed individual-level scores and would show only metrics aggregated at the organization level.

Barclays: Monitoring Time at Desks With Automated Warnings

The UK bank tracked workers’ computer usage and alerted them when they didn’t spend enough time working, which made employees uncomfortable about taking breaks. The practice was first reported by a whistleblower in 2020, and the bank has since scrapped the system.

This wasn’t the bank’s first attempt at employee surveillance. In 2017, Barclays installed heat and motion sensors to determine whether desks were in use, claiming it was using the data to measure office space utilization. After much criticism, the bank abandoned the initiative.

Expo Dubai 2020: Tracking Employee Health With Wearables

Over 5,000 construction workers for the Expo Dubai 2020 event were part of a health monitoring program. Workers had to wear WHOOP straps, which monitored their heart rate and sleep quality. Employers allegedly used this data to identify workers who needed to be more cautious at work due to underlying health conditions.

While the goal may be to improve health outcomes, some employees may perceive company-issued wearables as an invasion of privacy.

Uber: Automated Profiling and Decision-Making

In 2021, an Amsterdam court ordered Uber to reinstate five drivers and pay them damages after the company dismissed them based “solely on automated processing, including profiling.” The drivers had argued that Uber didn’t provide evidence of wrongdoing and that the decisions were based on flawed technology.

Walmart, Delta, T-Mobile, Chevron, Starbucks: Message Surveillance

Corporations such as Walmart, Delta, T-Mobile, Chevron, and Starbucks use digital surveillance tools from the startup Aware to monitor employee messages. Aware’s technology aims to gauge workers’ sentiment in real time and to flag improper employee behavior.

Although the analytics tool anonymizes employee data, Aware also offers a separate product for drilling into individual conversations when needed, which heightens privacy concerns.

The Impact of Digital Surveillance on Workers

While digital monitoring may benefit employers through efficiency gains, higher productivity, and better resource allocation, the same cannot be said for employees.

A 2023 American Psychological Association (APA) report concluded that work monitoring technologies negatively affect workers’ wellbeing. The APA found that employees in monitored environments were more likely than unmonitored employees to report burnout and emotional exhaustion and to feel less motivated to do their best at work.

Another study supports the link between digital workplace surveillance and employee mental health. Drawing on the experiences of over 3,500 Canadian employees across various industries and professions, it found that digital surveillance is indirectly linked to higher psychological distress and lower job satisfaction through elevated job pressures, reduced job autonomy, and invasions of privacy.

Interestingly, there is evidence that employee monitoring doesn’t necessarily translate into greater compliance or productivity. An experiment reported in Harvard Business Review found that employees were more likely to cheat or break the rules when they knew they were being monitored than when they knew they weren’t.

The researchers suggest that compliance depends on factors beyond rewards and punishments. Monitored employees tend to feel less responsible for their actions and are more likely to say that the managers monitoring them were responsible for the employees’ recorded behavior.

Moreover, some employees may try to appear to be more productive when placed under surveillance. An ExpressVPN survey found that nearly one in four employees fake being busy, using tactics such as keeping unnecessary apps open, scheduling emails, and logging in from their mobile devices.

Legislation and Guidelines on Digital Workplace Surveillance Are Evolving

Workplace surveillance regulations vary significantly across the US, Canada, the UK, and the EU (the markets covered in our research). Although legislation has advanced somewhat, these jurisdictions seem to be struggling to keep pace with advances in AI surveillance.

So far, the result seems to be a fragmented patchwork of regulations that often falls short of fully protecting workers’ rights and privacy. Below you’ll find a brief overview of the regulatory landscapes in these four jurisdictions.

United States

Legislation on the use of AI in employee surveillance is fragmented, as there is no overarching federal law regulating AI. The Electronic Communications Privacy Act (ECPA) allows employers to monitor communications on business-owned devices when there is a legitimate business reason. Whether employers must notify employees of monitoring (and obtain their consent) is determined at the state level.

For instance, private-sector employers in New York must give employees written notice upon hiring before monitoring their emails, online activities, and device usage. In California, employers must inform employees that they are being monitored and explain how their data will be used and protected. Meanwhile, Connecticut and Delaware require employers to give employees prior written notice of electronic monitoring.

Canada

Like the US, Canada lacks national legislation governing the use of AI. However, there has been some progress with the proposed Artificial Intelligence and Data Act (AIDA), which was designed to regulate the adoption of AI systems.

Currently, federally regulated employers must comply with the Personal Information Protection and Electronic Documents Act (PIPEDA) when handling employee data. Other private-sector employee data falls under provincial privacy laws, which results in an inconsistent approach to workplace surveillance across Canada.

United Kingdom

The UK is working on a bill to govern AI usage. Data protection generally falls under the UK General Data Protection Regulation (UK GDPR) and the Data Protection Act 2018. Workers have the right to know about any monitoring initiatives and the extent to which their emails, CCTV footage, and other data are monitored.

However, current regulations do not specifically address algorithmic bias and automated decision-making in the workplace.

European Union

The EU is generally considered to have the most stringent laws, focusing heavily on surveillance impact, employee privacy, and employer transparency. Workplace surveillance falls under the General Data Protection Regulation (GDPR) and the newly enacted AI Act, the first comprehensive legal framework for AI.

The AI Act, adopted in 2024, takes a risk-based approach that sorts AI systems into four levels: unacceptable risk, high risk, limited risk, and minimal risk. Examples of unacceptable-risk practices include using emotion recognition on employees in the workplace and using biometric data to infer sensitive characteristics of a person, such as race or political opinions.

AI tools for hiring and managing employees are classified as high risk and must meet stringent requirements before deployment, such as traceability features, appropriate human oversight, and adequate risk assessment and mitigation.

Where Do We Go From Here?

The lack of transparency around AI surveillance, data storage, and security protocols creates a power imbalance between employers and employees. Moreover, algorithms are not infallible. When algorithmic decisions are made without human supervision, employees could be exposed to algorithmic bias, systemic discrimination, and privacy violations.

Addressing these issues means protecting employee rights without stifling innovation in AI. That requires a combination of improved regulation and workplace policies, employer transparency and accountability, and employee representation.

According to guidance from the UK’s Information Commissioner’s Office (ICO), organizations need to ensure that their use of AI applications is lawful, transparent, fair, and secure. Recommendations include:

- Explaining AI decisions to employees.

- Disclosing the data that AI will collect, the purpose, the retention period, and who has access to it.

- Securing data and preventing unauthorized access, data loss, or damage.

- Ensuring employee consent is voluntarily given, specific, and informed.

Employers should also vet AI providers, prioritize human oversight, regularly review their monitoring policies and AI tools for bias, implement risk-mitigation strategies, and train HR teams so they can adequately interpret information from AI surveillance systems.

As an employee, you should understand your rights, ask questions when in doubt, and engage with relevant authorities when an issue arises.

The Bottom Line

Employee surveillance has evolved beyond CCTV cameras and basic device monitoring. Algorithmic decisions now influence resource allocation, hiring, and performance management. While AI surveillance tools deliver many benefits for employers, they are also eroding employee trust and mental wellbeing.

Much more needs to be done to regulate AI-driven employee surveillance. In many cases, the onus falls on employees to recognize privacy violations and advocate for themselves. Stronger regulations, clearer employer policies, and regular AI audits must be part of any responsible solution.

Ultimately, using AI to judge an employee’s true value is an extraordinarily difficult, if not impossible, task because humans are inherently complex. As AI-powered surveillance becomes more sophisticated, protecting workers’ rights becomes more critical to avoid a dystopian future in which software determines one’s performance, position, or salary.