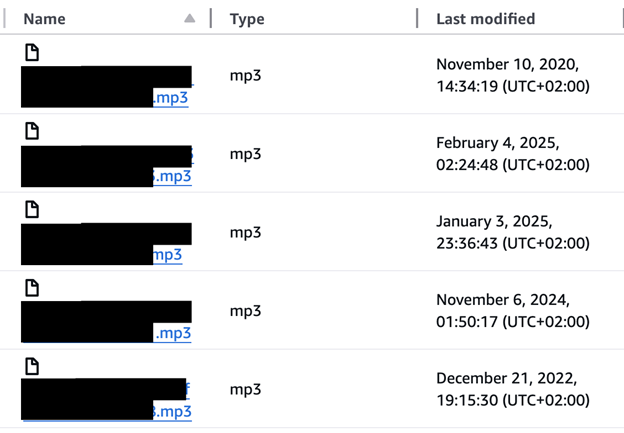

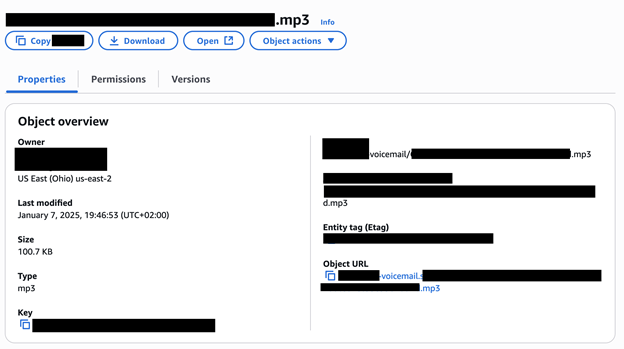

The publicly exposed database was not password-protected or encrypted. It contained 1,605,345 audio recordings in .mp3 format. In a limited sampling of the exposed files, I heard audio recordings that mentioned PII (such as names and phone numbers) and the reason for the call. The data in question appeared to come from a storage repository containing recordings of incoming calls and voice messages, which appear to have been collected from 2020 to 2025.

Information inside the database and the audio recordings indicated the records belonged to numerous gyms and fitness centers throughout the US and Canada. Based on a limited sample of the audio recordings, the vast majority of the calls I heard appeared to reference several of the largest and most well-known fitness brand names. I immediately reached out to the corporate privacy team from one of these organizations, who confirmed that they do not record audio, but that some independent franchisees were using a third-party solution for this purpose. After they launched an internal investigation and identified the third-party contractor, the database was restricted from public access and was no longer accessible.

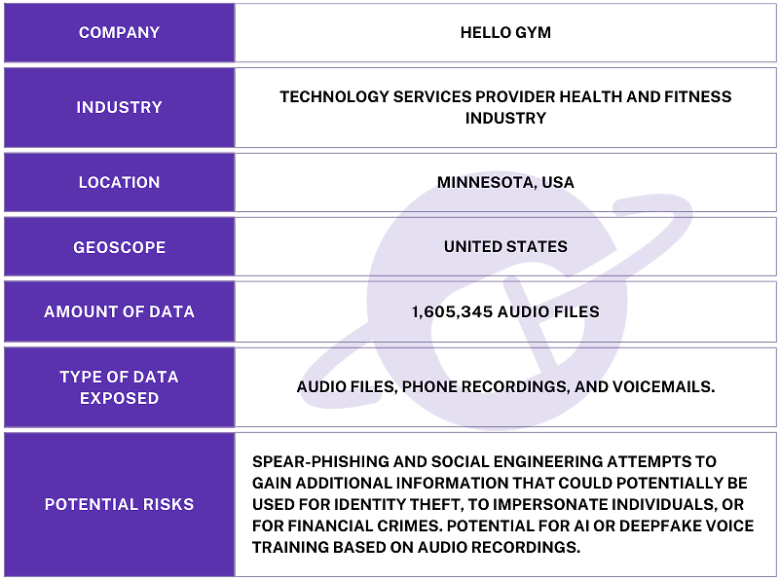

Although the records appeared to contain calls and voicemails belonging to a number of franchise locations of several well-known fitness brands, the database was managed by a third-party contractor known as Hello Gym. This was confirmed to me by multiple franchisees and at least one corporate representative. At the time of my discovery, Hello Gym publicly listed names and logos of multiple fitness center brands that matched those in the audio files I heard. The database was secured within hours of my responsible disclosure. It is not known how long the database was exposed before I discovered it or if anyone else may have gained access to it. Only an internal forensic audit could identify additional access or potentially suspicious activity.

Hello Gym is a Minnesota-based communication and lead management platform that caters specifically to the fitness industry. Their services aim to help gyms and fitness studios capture sales leads, manage calls, automate follow-ups, and boost member engagement. Hello Gym also provides VoIP phone services, automated outreach, and other sales tools. The exposed database was likely a storage repository for the VoIP audio files and intended for internal use.

There are numerous potential risks in the exposure of internal audio recordings of staff, clients, and prospective members. The voicemails that I heard should not have been publicly accessible, as they often included personal details such as names, phone numbers, and the reasons for calling. These reasons were most commonly related to billing issues, payment information updates, or membership renewals.

In this case, for instance, scammers could hypothetically impersonate a gym staff member and call a client using the information from the voicemail, asking them to provide updated credit or debit card details or pay a fraudulent cancellation fee. Strictly speaking, this is voice phishing (“vishing”) rather than a “man-in-the-middle” attack, but the effect is similar: the criminal inserts themselves between the gym and the member. The client would have little reason to doubt that the request is legitimate, as the criminal would have personalized and insider information that no one except the gym and the member should know, including the date and time of the call, among other details.

Another troubling trend among cybercriminals is to build a complete profile of potential victims by cross-referencing data from the dark web or other data breaches, aiming to identify public figures or high net worth targets. By this logic, each data point could serve as a puzzle piece to create a full picture of a potential victim—and cybercriminals having audio recordings of their voice only increases the potential risks.

While conducting an assessment of the exposed database, I also heard calls to the corporate and internal client services department from gym staff. These employees provided their names, gym number, and personal passwords to verify themselves before requesting refunds or account changes for members. This poses a potential risk of cybercriminals impersonating employees with valid credentials.

In another recording, a gym manager called a security monitoring service to disable the alarm for testing purposes. They provided their name, location, and password credentials. Hypothetically, someone could use this type of information in an attempt to disable the security alarm and gain unauthorized physical access to a gym after business hours.

I am not claiming that any of the customers of Hello Gym’s services, including clients, members, or staff, are at risk of these types of threats. I am only identifying hypothetical real-world scenarios of how this information or biometric voice data could potentially be used by criminals.

Potential AI Voice Cloning Risks

Cloning voices used to seem like something out of a science fiction movie, but now technology has provided average people (and criminals) with the ability to create text, audio, and videos that are often hard to identify as fake. In 2023, researchers at Microsoft announced the development of an AI tool called VALL-E, which was able to achieve human voice cloning results with just 3 seconds of audio. The fact that AI models are capable of cloning voices with a high level of accuracy is terrifying, especially considering that many companies often record our conversations. (We have all called a business or organization and heard the message “Your call may be recorded for quality and training purposes.”) In such instances, consumers or clients generally have little or no control over how long those files are kept, not to mention the potential outcomes should these recordings fall into the wrong hands.

There are already numerous cases of biometric voice data being weaponized by cybercriminals to carry out sophisticated social engineering attacks. In 2019, scammers cloned a German CEO’s voice to trick a UK energy executive into transferring $243,000, believing he was following direct orders. In 2024, criminals in Hong Kong created deepfake audio and video of corporate executives, which led an employee to transfer $25 million. In 2025, a Florida woman sent $15,000 to criminals after they used social media videos to clone the voice of her daughter crying for help. These attacks only highlight the potential risks and creativity of criminals with access to AI technology.

I am not saying or implying that the voice recordings of gym members or staff are or were ever at risk of deepfake cloning or misuse. I am only providing hypothetical risks and examples of prior documented deepfake cases exclusively for educational purposes.

In the United States, voice data could be considered both personal and biometric information, depending on how it is used, how it is collected, and other factors. According to a policy statement from the Federal Trade Commission on biometric information, Section 5 of the Federal Trade Commission Act recognizes voice recordings as biometric data when voiceprints can be used to identify individuals. Moreover, at the state level, there is a patchwork of laws (like Illinois’ Biometric Information Privacy Act (BIPA) and similar regulations in Texas, Washington, and California) that indicate voiceprints or voice data are considered to be biometric identifiers. Although there are no comprehensive federal privacy laws currently covering voiceprints, these regulations suggest a growing recognition that, in the age of AI and audio deepfakes, voice data could and should be categorized and regulated as “sensitive” in the near future at the federal level.

What You Can Do

My advice to anyone who may believe that their personal information or biometric voice data has potentially been exposed in any data breach is to take steps to protect themselves. The first step is to understand the potential risks of phishing and social engineering attacks. It is estimated that nearly 98% of all cyberattacks rely on some form of human interaction and social engineering. As criminals become more creative and have easier access to sophisticated AI tools, their success rates will ultimately increase at the expense of their victims. Knowing the most common scams and methods criminals use is crucial when it comes to protecting yourself. The FTC provides great resources to learn about the latest scams, how to avoid falling prey to them, and how to report them to law enforcement.

As a general rule, always be skeptical of unexpected or unsolicited phone calls. In the new era of AI-powered criminals, even if the voice sounds familiar or the caller references account details or membership information, you should always validate that the person is who they say they are as a first step. I personally have a codeword with my family and close associates so that if they ever get a call from someone claiming to be me and the person doesn’t know the codeword, it is not me. Another suggestion is to never share sensitive personal information or payment details over the phone unless there are no other options. Always use official communication channels when providing personal details, use payment portals from the company’s official website, or make payments in person when possible.

When we think of fitness gyms and exercise, cybersecurity is usually not the first thing that comes to mind. This is another reminder that any physical business that collects and stores data is also a technology company in a sense. Offline businesses (like those in the fitness industry) must take data protection into account as part of their trade.

My advice to any organization that collects customer or client information, PII, biometric data, and other potentially sensitive records is to:

- Use encryption to make those files non-human-readable. This ensures that, if data is exposed accidentally or through malicious actions, there is an additional layer of security, as it is much more difficult to decrypt those files.

- Conduct penetration and vulnerability testing. This can help companies identify weaknesses or misconfigured storage systems that expose data and allow public access. Implementing access controls to specific authorized internal users on a time-limited basis is also a good idea. It can help prevent mistakes or limit potential insider attacks.

- Segment data that is not in use. Far too often I see organizations storing years’ worth of records in a single database and not deleting old files. As a general rule, it is a good strategy to securely back up old data to limit the exposure in the event of a data incident.

- Choose your partners wisely. When choosing a third-party service, it is a good idea to ask questions about data protection and security to ensure any potential partner is compliant with the needs of your business or industry. Most service providers are happy to share information about their data security policies and the procedures they have in place to ensure sensitive data is protected.
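
To make the idea of time-limited access for specific authorized users more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not a description of Hello Gym's systems or of any vendor's API: the secret key, the user and recording identifiers, and the function names (`sign_access`, `grant_access`, `is_access_valid`) are all hypothetical. The sketch signs a user, a recording, and an expiry time with an HMAC, so a grant stops working once the expiry passes and cannot be reused for a different user or file.

```python
import hashlib
import hmac
import json
import time

# Hypothetical secret for illustration only; a real deployment would load
# this from a secrets manager rather than hard-coding it.
SECRET_KEY = b"replace-with-a-long-random-secret"


def sign_access(user, recording_id, expires_at, key=SECRET_KEY):
    """Return an HMAC-SHA256 signature over (user, recording, expiry)."""
    # JSON encoding keeps the three fields unambiguous, even if a value
    # happens to contain a separator character.
    message = json.dumps([user, recording_id, expires_at]).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def grant_access(user, recording_id, ttl_seconds, now=None):
    """Issue a grant that expires ttl_seconds after `now` (Unix time)."""
    now = int(time.time()) if now is None else now
    expires_at = now + ttl_seconds
    return expires_at, sign_access(user, recording_id, expires_at)


def is_access_valid(user, recording_id, expires_at, signature, now=None):
    """Accept only unexpired grants whose signature matches exactly."""
    now = int(time.time()) if now is None else now
    if now >= expires_at:
        return False  # the grant has expired
    expected = sign_access(user, recording_id, expires_at)
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    # A 15-minute grant for one hypothetical staff member and one file.
    expiry, sig = grant_access("manager-01", "call-0001.mp3", ttl_seconds=900)
    print(is_access_valid("manager-01", "call-0001.mp3", expiry, sig))
    print(is_access_valid("someone-else", "call-0001.mp3", expiry, sig))
```

In this sketch, a storage service would check `is_access_valid` before serving a file, so a leaked link or a forgotten permission stops working on its own instead of staying open indefinitely.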

Website Planet’s Recent Publications

At Website Planet, we work with an experienced team of ethical security research experts who uncover and disclose serious data leaks. Recently, cybersecurity expert Jeremiah Fowler discovered and disclosed a non-password-protected database which exposed nearly 1 million records apparently belonging to Ohio Medical Alliance, an organization that helps individuals obtain physician-certified medical marijuana cards. He also found another unsecured database totaling 378.7 GB that appeared to belong to Virginia-based Navy Federal Credit Union.